Last Updated on by Azib Yaqoob

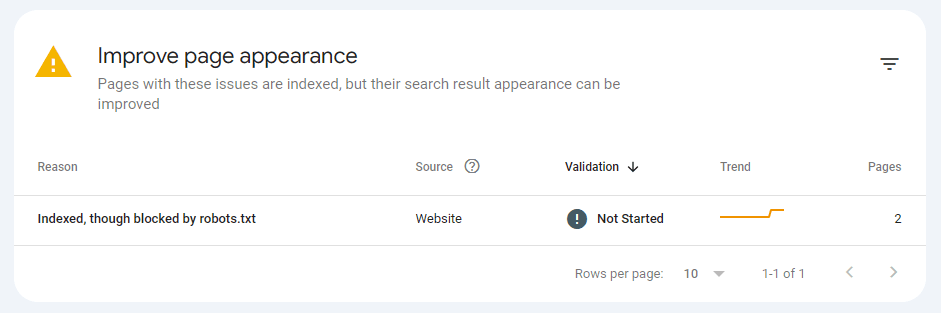

The “Indexed, but Blocked by Robots.txt” error in Google Search Console occurs when a URL on your website has been added to Google’s index even though your robots.txt file blocks Google from crawling it. (Search Console’s Page indexing report labels this status “Indexed, though blocked by robots.txt”.) Because Googlebot can’t fetch the page, Google indexes the URL without seeing its content. This sends mixed signals about your site and can hurt how the page appears in search results.

In this comprehensive guide, I will explore the causes of the error, provide step-by-step instructions to troubleshoot and fix it, and share best practices for optimizing your robots.txt file.


What Does “Indexed, but Blocked by Robots.txt” Mean?

When you see this error in Google Search Console, it means:

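- Google discovered the URL, usually through links from your own pages or other sites, and indexed it.

- Your robots.txt file disallows crawling of that URL, so Googlebot never fetched the page itself.

- Because Google indexed the URL without reading its content, the search result may show little or no description, and any noindex tag on the page goes unseen. Both problems can affect how the page appears and ranks.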

Common Causes

- Misconfigured Robots.txt File: Specific rules in the robots.txt file prevent search engines from crawling parts of your site.

- Intentional Blocking: Pages or resources you restricted on purpose (e.g., admin pages) can still be indexed if other pages link to them.

- Dynamic URL Indexing: Search engines sometimes index parameterized or duplicate URLs that fall under a disallow rule but are linked from elsewhere.

- Legacy Rules: Outdated rules from older site setups might still be blocking valid URLs.

Step-by-Step Guide to Fix the Error

Step 1: Verify the Error in Google Search Console

- Log in to Google Search Console.

- Navigate to Indexing > Pages (this report was previously called Coverage) and open the “Indexed, though blocked by robots.txt” status.

- Review the list of affected URLs:

  - Identify which URLs are incorrectly blocked.

  - Note any patterns (e.g., blocked sections or file types).
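If the list is long, you can export it from the report and look for patterns with a few lines of Python. The sketch below is a minimal example that assumes you have pasted the exported URLs into a list. The function names and sample URLs are hypothetical. It counts affected URLs by their first path segment, so a cluster such as /category/ points you to the rule worth investigating.

```python
from collections import Counter
from urllib.parse import urlparse


def section_of(url: str) -> str:
    """Return the top-level section of a URL, e.g. '/category/' for '/category/seo/'."""
    segments = [part for part in urlparse(url).path.split("/") if part]
    return f"/{segments[0]}/" if segments else "/"


def count_sections(urls: list[str]) -> Counter:
    """Count affected URLs per top-level section to expose blocking patterns."""
    return Counter(section_of(url) for url in urls)


if __name__ == "__main__":
    # Hypothetical sample; replace with the URLs exported from the report.
    affected = [
        "https://yourwebsite.com/category/seo/",
        "https://yourwebsite.com/category/wordpress/",
        "https://yourwebsite.com/category/seo/page/2/",
        "https://yourwebsite.com/?s=robots",
    ]
    for section, count in count_sections(affected).most_common():
        print(f"{section}\t{count}")
```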

Step 2: Locate and Access Your Robots.txt File

The robots.txt file must sit at the root of your domain, and each subdomain needs its own file. For example:

https://yourwebsite.com/robots.txt
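A robots.txt file only governs the protocol, host, and port it is served from. The minimal Python sketch below derives the robots.txt URL for any page and can optionally download the file with the standard library. The helper names are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(page_url: str) -> str:
    """Build the robots.txt URL that governs page_url.

    The file lives at the root of each protocol + host (+ port), so the
    page's path, query string, and fragment are dropped.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(page_url: str, timeout: float = 10.0) -> str:
    """Download the robots.txt that applies to page_url (needs network access)."""
    with urlopen(robots_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    print(robots_url("https://yourwebsite.com/category/seo/?page=2"))
    print(robots_url("https://shop.yourwebsite.com/product/1"))
```

fetch_robots needs a live connection, so the example only calls robots_url.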

How to Access the File

- WordPress Plugin:

  - If you use an SEO plugin like Yoast SEO or Rank Math, you can edit the file directly from your WordPress dashboard.

  - Open your plugin's file editor (in Yoast SEO, go to Yoast SEO > Tools > File editor).

- FTP or File Manager:

  - Log in to your server using FTP or a hosting control panel (e.g., cPanel).

  - Navigate to the root directory (usually public_html) to locate the robots.txt file.

Step 3: Analyze the Robots.txt Rules

Examine the rules in the file to identify which lines might be causing the issue.

Example of a Misconfigured Robots.txt File:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

Disallow: /category/

Here’s what each rule does:

- User-agent: *: Applies the rules to all bots.

- Disallow: /wp-admin/: Blocks crawling of the admin area (this is normal).

- Disallow: /wp-includes/: Blocks crawling of WordPress core files. This is common in older setups, but it can stop Google from loading scripts and stylesheets it needs to render your pages.

- Disallow: /category/: Blocks all category archive pages, which might unintentionally block valuable content.

Step 4: Test and Debug the Robots.txt File

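Google retired its standalone robots.txt Tester in 2023. To debug a blocked URL, use the URL Inspection tool in Google Search Console:

- Enter the URL of the affected page in the URL Inspection bar.

- Under Page indexing, check the Crawl allowed? field. A “No” that mentions robots.txt confirms the block.

- Locate the corresponding rule in your robots.txt file. The robots.txt report (Settings > robots.txt) shows which version of the file Google last fetched.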
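To check a batch of URLs against a draft file before you upload it, you can use Python's built-in urllib.robotparser module for a quick offline answer. Treat this as a rough sketch. robotparser applies the first matching rule in file order and does not understand the * and $ wildcards, so its verdict can differ from Googlebot's for files that use wildcards or overlapping Allow/Disallow rules. The draft below is the Step 3 example. The blocked_urls helper and the sample URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The Step 3 example, written without blank lines: Python's parser
# treats an empty line as the end of a user-agent group.
DRAFT_ROBOTS = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
Disallow: /category/
"""


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that robots_txt disallows for the given user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    affected = [
        "https://yourwebsite.com/category/seo/",
        "https://yourwebsite.com/blog/fix-robots-txt/",
        "https://yourwebsite.com/wp-admin/options.php",
    ]
    for url in blocked_urls(DRAFT_ROBOTS, affected):
        print("blocked:", url)
```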

Step 5: Modify the Robots.txt File

To fix the error, remove or update the problematic rules. Below are common adjustments:

1. Allow Access to Blocked Sections

If legitimate content is blocked:

- Replace Disallow with Allow for the specific path (or simply delete the Disallow line, since crawling is allowed by default):

User-agent: *

Allow: /category/

2. Use Wildcards and Specific Rules

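To unblock specific pages but keep others restricted, refine your rules. Google applies the most specific matching rule (the one with the longest path), so the Allow line below overrides the broader Disallow for that one page: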

User-agent: *

Disallow: /category/*

Allow: /category/specific-page/
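The Python sketch below models the precedence Google documents for choosing between matching rules. Among the rules that match a URL path, the one with the longest path wins, and an Allow beats a Disallow of equal length. The pattern_to_regex and decide helpers are hypothetical. They handle only a single user-agent group and expect the URL path (plus any query string) rather than a full URL.

```python
import re
from typing import Optional

# The example rules above for "User-agent: *", as (directive, path pattern) pairs.
STEP_5_RULES = [
    ("Disallow", "/category/*"),
    ("Allow", "/category/specific-page/"),
]


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex matched from the path start.

    '*' matches any sequence of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def decide(rules: list[tuple[str, str]], path: str) -> tuple[bool, Optional[str]]:
    """Return (allowed, deciding_rule) for a URL path.

    The matching rule with the longest path wins; on a tie, Allow wins.
    If no rule matches, crawling is allowed by default.
    """
    best = None  # (pattern length, is_allow, directive, pattern)
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty "Disallow:" blocks nothing
        if pattern_to_regex(pattern).match(path):
            candidate = (len(pattern), directive.lower() == "allow", directive, pattern)
            if best is None or candidate[:2] > best[:2]:
                best = candidate
    if best is None:
        return True, None
    return best[1], f"{best[2]}: {best[3]}"


if __name__ == "__main__":
    for path in ["/category/specific-page/", "/category/other-page/", "/blog/post/"]:
        allowed, rule = decide(STEP_5_RULES, path)
        print(f"{path}: {'allowed' if allowed else 'blocked'} ({rule or 'no matching rule'})")
```

The helper reports which rule decided the outcome, so it can also help you find the exact line to edit when a URL is unexpectedly blocked.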

3. Remove Overly Restrictive Rules

If the entire directory doesn’t need blocking:

# Remove this:

Disallow: /blog/

# Replace with specific exclusions:

Disallow: /blog/private/

Step 6: Clear Caches and Resubmit Robots.txt

After updating your robots.txt file:

- Clear your website and server caches to ensure the new file is live.

- Verify the updated file by visiting https://yourwebsite.com/robots.txt.

- Ask Google to fetch the updated file in Google Search Console:

  - Go to Settings > robots.txt to open the robots.txt report.

  - Request a recrawl of the file from the report.
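Caching layers sometimes keep serving the old file. As a rough check, the Python sketch below compares the file you edited with the one your site actually serves, ignoring comments, blank lines, and surrounding whitespace. The helper names are hypothetical. fetch_live needs network access, and a CDN may still return a cached copy.

```python
from urllib.request import urlopen


def normalize(robots_txt: str) -> list[str]:
    """Drop comments, blank lines, and surrounding whitespace so only directives remain."""
    directives = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()
        if line:
            directives.append(line)
    return directives


def live_matches(expected: str, live: str) -> bool:
    """True when the served file contains the same directives, in the same order."""
    return normalize(expected) == normalize(live)


def fetch_live(url: str = "https://yourwebsite.com/robots.txt", timeout: float = 10.0) -> str:
    """Download the robots.txt your site currently serves (needs network access)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    edited = "User-agent: *\nDisallow: /blog/private/\n"
    served = "# served copy\nUser-agent: *\n\nDisallow: /blog/\n"  # a stale example
    print("live file is up to date" if live_matches(edited, served) else "stale copy still served")
```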

Step 7: Use Noindex Tags for Specific URLs

If you want to keep a page out of Google’s index, use a noindex meta tag instead of blocking it in robots.txt. Google has to crawl the page to see the tag, so the URL must not be disallowed:

- Edit the page in WordPress.

- Add the following meta tag inside the page’s head section:

<meta name="robots" content="noindex, follow">

- Save your changes and make sure no robots.txt rule blocks the page.
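The Python sketch below checks both conditions for a page. First, the HTML must carry a robots noindex directive. Second, robots.txt must let Googlebot crawl the URL. It uses only the standard library, and the class and function names are hypothetical. It relies on urllib.robotparser's simplified matching, which ignores wildcards, so treat its answer as approximate for complex files.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives += [part.strip().lower() for part in content.split(",")]


def noindex_will_work(html: str, robots_txt: str, url: str) -> bool:
    """True only if the page says noindex AND robots.txt lets Googlebot crawl it."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    # A disallowed page is never fetched, so Google would never see the tag.
    return "noindex" in finder.directives and rules.can_fetch("Googlebot", url)


if __name__ == "__main__":
    page = '<head><meta name="robots" content="noindex, follow"></head>'
    robots = "User-agent: *\nDisallow: /wp-admin/"
    print(noindex_will_work(page, robots, "https://yourwebsite.com/thank-you/"))
```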

Step 8: Monitor the Changes

After implementing fixes:

- Return to Google Search Console.

- Open Indexing > Pages (formerly Coverage), select the “Indexed, though blocked by robots.txt” status, and click Validate Fix.

- Monitor the URLs for updated status reports.

Best Practices for Managing Robots.txt in WordPress

- Keep it Simple: Avoid overly complex rules that may unintentionally block valuable content.

- Block Irrelevant Sections:

  - Common exclusions:

    Disallow: /wp-admin/

    Disallow: /wp-includes/

- Enable Sitemap Access:

  - Make sure search engines can reach your sitemap, and point them to it with a Sitemap directive:

    Allow: /sitemap.xml

    Sitemap: https://yourwebsite.com/sitemap.xml

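- Test Regularly: Check the robots.txt report and the URL Inspection tool in Search Console periodically to ensure rules are correctly applied.

- Use Robots.txt and Noindex for Different Jobs: Use robots.txt to control crawling and noindex tags to control indexing. Don’t combine them on the same URL, because Google can’t see a noindex tag on a page it isn’t allowed to crawl.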
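To confirm the sitemap advice above, you can use Python's urllib.robotparser (Python 3.8 or later). Its site_maps() method lists the Sitemap URLs a file declares, and can_fetch() checks whether each one is crawlable. A minimal sketch, with a hypothetical helper name and example file:

```python
from urllib.robotparser import RobotFileParser

EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /wp-admin/
Sitemap: https://yourwebsite.com/sitemap.xml
"""


def check_sitemaps(robots_txt: str, agent: str = "Googlebot") -> dict[str, bool]:
    """Map every Sitemap URL declared in robots_txt to whether agent may crawl it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    declared = parser.site_maps() or []  # site_maps() returns None if nothing is declared
    return {url: parser.can_fetch(agent, url) for url in declared}


if __name__ == "__main__":
    result = check_sitemaps(EXAMPLE_ROBOTS)
    if not result:
        print("No Sitemap directive found")
    for url, allowed in result.items():
        print(url, "crawlable" if allowed else "BLOCKED")
```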

Conclusion

The “Indexed, but Blocked by Robots.txt” error can hinder your SEO efforts, but it’s manageable with proper troubleshooting. By carefully analyzing and updating your robots.txt file, testing your changes, and monitoring Google Search Console, you can resolve the error and ensure your site performs optimally in search results.

Regular maintenance and adherence to best practices will prevent similar issues from recurring, keeping your WordPress site in good health and search-friendly.

Let me know in the comments if you face any issues.