Last Updated on by Azib Yaqoob

If you are new to SEO, you have probably heard of robots.txt by now. I am going to tell you exactly what robots.txt is for and how you can use it to improve your SEO.

Robots.txt is a simple text file that you create and place in the root directory of your website to tell web crawlers (search engine bots) which pages on your site they may and may not access.

The robots.txt file is an important part of the REP (Robots Exclusion Protocol). This protocol is a group of web standards that regulate how robots crawl the web, access and index content, and serve that content to users.
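To make the idea concrete, here is a minimal sketch of how a well-behaved crawler consults robots.txt before fetching a page. It uses Python's standard-library `urllib.robotparser` module; the `/private/` folder, the `MyCrawler` bot name, and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every crawler ("*") is asked to stay out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A polite crawler checks each URL against the rules before fetching it.
# "MyCrawler" is a made-up bot name; no group names it, so the "*" group applies.
for url in ("https://www.example.com/blog/", "https://www.example.com/private/report.html"):
    allowed = parser.can_fetch("MyCrawler", url)
    print(url, "->", "crawl" if allowed else "skip")
```

Keep in mind that robots.txt is a request, not a lock: compliant crawlers honor it, but nothing technically stops a bot from ignoring it.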

Importance of Robots.txt

Although it is a small, simple text file, it can do serious damage to your online presence. If you upload the wrong file, it can signal to search engine robots that they are not allowed to crawl your site.

As a result, your web pages may disappear from search engine results pages (SERPs). That is why it is important not only to understand the purpose of a robots.txt file from an SEO perspective, but also to learn how to check whether you are using it correctly.

If you don’t want search engine bots to crawl “certain” pages of your site, your robots.txt file is where you give them that instruction.

For example, if you don’t want any of your images to appear in search engines, you can block search bots from them with a simple Disallow directive in your robots.txt file.
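As a sketch of that image rule: Google documents a separate crawler token, Googlebot-Image, for Google Images, so a group addressed to that token can keep images out of Google Images without affecting normal page crawling. This example assumes you only care about Google; other search engines use their own user-agent names. The rules are checked with Python's `urllib.robotparser`, and the image URL is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Block Google's image crawler from the whole site; other crawlers are unaffected.
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

image_url = "https://www.example.com/images/logo.png"  # hypothetical image URL
print(parser.can_fetch("Googlebot-Image", image_url))  # False: the image crawler is blocked
print(parser.can_fetch("Googlebot", image_url))        # True: no group applies to Googlebot
```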

How does Robots.txt Work?

When search engine bots crawl your site, they first look for a robots.txt file in the root directory. This file tells them which pages they may crawl and which they may not. Note that robots.txt controls crawling rather than indexing: a blocked page can still show up in search results if other sites link to it. If you see the “Indexed, though blocked by robots.txt” error, read this guide.
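Because crawlers only look in the root directory, the robots.txt that governs any page can be worked out from the page's URL alone. The sketch below (the helper name `robots_txt_url` is hypothetical) keeps the scheme, host, and port and swaps the path for `/robots.txt`. Rules apply per protocol, host, and port, so a subdomain such as shop.example.com needs its own file.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    Crawlers only check the root of the host (scheme + host + port),
    never a subdirectory, so the path, query and fragment are dropped.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_txt_url("https://www.example.com/blog/post?id=1#top"))
# https://www.example.com/robots.txt
print(robots_txt_url("https://shop.example.com:8443/cart"))
# https://shop.example.com:8443/robots.txt
```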

You can use robots.txt file to:

- Keep search bots from crawling duplicate web pages on your site

- Keep bots out of your website’s internal search result pages

- Restrict bots from crawling certain sections of your site, or the whole website

- Prevent search bots from crawling certain files on your site, e.g., images and PDFs
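Here is a sketch of a file that covers several of the uses listed above, assuming a hypothetical layout where internal search results live under `/search`, printer-friendly duplicates under `/print/`, and PDFs under `/downloads/pdf/`. Disallow values are prefix matches, so `/search` also blocks URLs like `/search?q=shoes`. Python's `urllib.robotparser` only does plain prefix matching and does not understand the `*` and `$` wildcards that Google supports, which is why the PDFs are grouped in a folder here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths: adjust them to match your own site structure.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /print/
Disallow: /downloads/pdf/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

paths = ("/products/shoes", "/search?q=shoes", "/print/products/shoes", "/downloads/pdf/catalog.pdf")
for path in paths:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path:32} {'allowed' if allowed else 'blocked'}")
```

Only `/products/shoes` comes back as allowed; the other three paths match a Disallow prefix.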

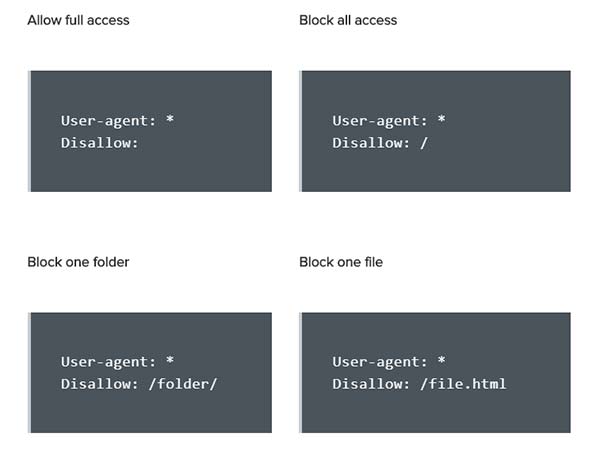

Examples of a Basic Robots.txt

See some examples of basic robots.txt files in the image below:
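Another common basic pattern, shown here as a sketch with hypothetical WordPress-style paths, blocks an admin folder, re-allows one file inside it, and points crawlers to the XML sitemap. Python's `urllib.robotparser` applies the first rule that matches, while Google uses the most specific (longest) matching rule. Listing the Allow line before the Disallow line makes both interpretations agree. `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical WordPress-style file: block the admin area, re-allow one file
# inside it, and tell crawlers where the XML sitemap lives.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

base = "https://www.example.com"
print(parser.can_fetch("Bingbot", base + "/wp-admin/options.php"))     # False
print(parser.can_fetch("Bingbot", base + "/wp-admin/admin-ajax.php"))  # True
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```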

Check the Priorities of your Website:

When it comes to robots.txt files, there are three things to check:

- Check if your site has a robots.txt file

- If you already have one, make sure it is not harming your site by blocking content that you don’t want blocked

- Determine whether your website needs a robots.txt file

How to Check if Your Site is Using a Robots.txt File?

You can use any browser to check whether your website has a robots.txt file. Just add /robots.txt to the end of your domain name (for example, https://www.example.com/robots.txt), as shown in the image below:

Is your Robots.txt File Blocking Important Pages?

You can use Google Search Console to check whether your robots.txt file blocks page resources (such as CSS and JavaScript files) that Google needs to fully understand your web pages.
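Search Console is the authoritative check, since it uses Google's own parser. For a rough local check before uploading a new file, you can test a list of important URLs against your rules. The helper `blocked_urls`, the `/assets/` folder, and the resource URLs below are all hypothetical, and Python's parser is simpler than Google's (no wildcard support), so treat the result as a hint rather than a verdict.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return the URLs that robots_txt forbids user_agent to fetch (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# Hypothetical file that accidentally hides the theme's CSS and JavaScript.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/
"""

resources = [
    "https://www.example.com/",
    "https://www.example.com/assets/css/site.css",
    "https://www.example.com/assets/js/menu.js",
]
for url in blocked_urls(ROBOTS_TXT, "Googlebot", resources):
    print("blocked:", url)
```

Here the home page passes, but both the stylesheet and the script are reported as blocked.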

Does your Website Need a Robots.txt File?

Your website does not have to have a robots.txt file, and that is perfectly fine. In many cases, you don’t need one.

Reasons you may Need a Robots.txt File:

Here are some reasons your website may need a robots.txt file:

- Your website has some content that you don’t want to be indexed by search engines

- Your website contains paid links or ads that require special instructions for robots

- Robots.txt files may help you follow some guidelines from Google in certain situations

Reasons you may not Need a Robots.txt File:

Here are some reasons you may not need a robots.txt file:

- You have a simple website structure

- You don’t have any content on your site that you want to block from search engines

If your website doesn’t have a robots.txt file, search engine robots will have full access to your website. This is very common.

How to Create a Robots.txt File?

Creating a robots.txt file is easy. This article from Google walks you through the whole process.

Usually, people want every part of their website to be crawled and indexed by search engines. To allow this, you have three options:

Don’t create a Robots.txt File:

When a search engine robot visits a website and doesn’t find a robots.txt file there, it assumes it is free to crawl every page of the website.

Create an Empty File Named Robots.txt:

If your website has a robots.txt file that is empty, robots treat it as having no restrictions and may crawl every web page.

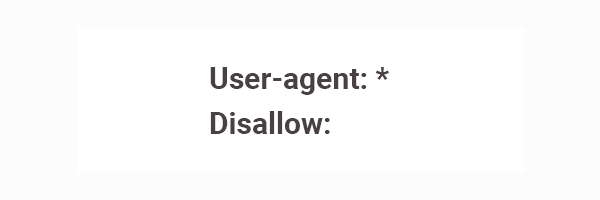

Save a Robots.txt File with only Two Lines:

If you want search engine robots to crawl every page of your website, you can save a robots.txt file containing just the two lines shown in the following image:

These two lines tell robots that they are allowed to crawl all of your web pages.

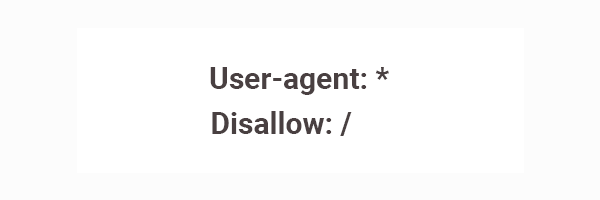
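To see that an empty file and the two-line "allow everything" file behave the same way, here is a sketch using Python's `urllib.robotparser`. It assumes the two lines are the usual `User-agent: *` and `Disallow:`; a Disallow line with an empty value blocks nothing. The helper name `make_parser` and the paths are hypothetical. (Python's `RobotFileParser.read()` likewise treats a missing file, an HTTP 404, as "allow everything".)

```python
from urllib.robotparser import RobotFileParser

def make_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

empty_file = make_parser("")                             # option 2: empty robots.txt
allow_all = make_parser("User-agent: *\nDisallow:\n")    # option 3: the two-line file

for path in ("/", "/blog/post-1", "/images/logo.png"):
    url = "https://www.example.com" + path
    # Both parsers allow every URL, so each line ends with "True True".
    print(path, empty_file.can_fetch("Googlebot", url), allow_all.can_fetch("Googlebot", url))
```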

What if you Don’t Want Any of your Content to be Crawled?

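This is risky, because it means search engines that follow robots.txt cannot crawl any of your web pages, so your site will effectively drop out of search results. If you still want to do this, save a robots.txt file containing the line "User-agent: *" followed by "Disallow: /".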
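A quick sketch, again with Python's `urllib.robotparser` and hypothetical example.com paths, shows how much a single slash changes: `Disallow: /` matches every path on the site. Even so, Google notes that a blocked URL can still appear in results (without a description) if other pages link to it, so a noindex directive on the page itself, which Google must be able to crawl, is the reliable way to keep a page out of search results.

```python
from urllib.robotparser import RobotFileParser

# "Disallow: /" is a prefix of every path, so compliant crawlers may fetch nothing.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(BLOCK_ALL.splitlines())

for path in ("/", "/about", "/blog/post-1"):
    print(path, parser.can_fetch("Googlebot", "https://www.example.com" + path))  # False each time
```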

Robots.txt can play an important role in determining where your website appears on SERPs. That is why you need to handle this simple but important file carefully.